Let’s follow up on this potentially dramatic development with an example. We ask ourselves which factors drive non-commercial spaceflight, measured by the number of non-commercial launches. You are probably wondering why we are now looking at such a wacky research question. The reason is that data-driven algorithms have found a fascinating answer to it: the number of sociology PhDs awarded in the US!

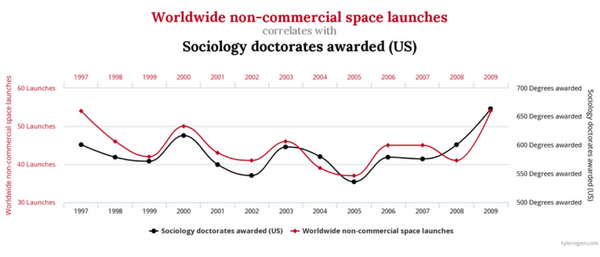

Surely you would have arrived at different factors using theory drawn from the literature. You may even doubt this fascinating insight. If so, consider the graph below. Sociology doctorates track launch activity remarkably well: nearly all the peaks and troughs of the two series coincide! (More such correlations can be found on Tylervigen.com.)

Worldwide non-commercial space launches. Source: Tylervigen.com

Of course, some of you will still have doubts: is this really a robust correlation? Good machine learning algorithms are trained to find robust correlations, so let us apply that standard here. Suppose we use only the 1997 to 2003 data in the graph to “teach” the algorithm the relationship between sociology PhDs and launches. Would this give the algorithm good predictive power for the years after 2003? The answer is clearly yes: the correlation is reasonably robust over time. So the algorithm has indeed passed the robustness test!

So what have we seen? Perhaps the correlation can be explained by the fact that both variables are influenced by the state of the US economy and thus by the size of the US budget. Theory-based thinking (or, quite simply, common sense) quickly tells us that we are dealing with a correlation between two variables that cannot be attributed to a causal relationship. The same applies to a very large part of what is currently called the machine learning paradigm. Machines use algorithms to learn to recognise various “patterns” in data, but they are blind to the difference between causality and correlation. Theory- and model-based data analysis, on the other hand, aims at causality, and this gives it a potentially important advantage over algorithms.
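The common-cause explanation can be made concrete with a toy simulation. The following Python sketch uses purely synthetic numbers, not the Tylervigen data. It assumes a hypothetical “budget” series that drives both PhD numbers and launches through simple linear relations, with no direct link between the two. A straight line fitted on the first 7 of 13 hypothetical years (mirroring the 1997 to 2003 window) still predicts the remaining years well, so the correlation “passes” an out-of-sample test. Setting PhD numbers at random instead, as a hypothetical intervention, makes the correlation collapse, because launches never depended on PhDs in the first place.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return my - b * mx, b


def simulate(n_years, seed, intervene=False):
    """Hypothetical data: a common 'budget' drives PhDs and launches.

    With intervene=True, PhD numbers are set at random (as if decided by
    a policy), which cuts their link to the budget.
    """
    rng = random.Random(seed)
    budget = [100 + 15 * math.sin(t) for t in range(n_years)]
    if intervene:
        phds = [rng.uniform(800, 1000) for _ in budget]
    else:
        phds = [500 + 4 * b + rng.gauss(0, 5) for b in budget]
    # Launches depend only on the budget, never on PhDs.
    launches = [10 + 0.5 * b + rng.gauss(0, 0.5) for b in budget]
    return phds, launches


def out_of_sample_check(n_train=7, n_years=13, seed=42):
    """Fit launches on PhDs for the first n_train years, predict the rest.

    Returns (full-sample correlation, mean absolute prediction error).
    """
    phds, launches = simulate(n_years, seed)
    a, b = fit_line(phds[:n_train], launches[:n_train])
    preds = [a + b * x for x in phds[n_train:]]
    actual = launches[n_train:]
    mae = sum(abs(p - y) for p, y in zip(preds, actual)) / len(actual)
    return pearson(phds, launches), mae


if __name__ == "__main__":
    r, mae = out_of_sample_check()
    print(f"correlation: {r:.2f}, out-of-sample MAE: {mae:.2f} launches")
    phds, launches = simulate(200, seed=7, intervene=True)
    print(f"correlation after intervening on PhDs: {pearson(phds, launches):.2f}")
```

The point of the sketch is that a good out-of-sample fit only shows the pattern is stable; it says nothing about what would happen if one variable were changed deliberately.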

Now let’s look at a subject area that is a little closer to the world of management than space travel: what about the connection between corporate social responsibility (CSR) and a company’s profitability?

There are ardent CSR advocates who like to point out that firms that implement CSR programmes also show higher profitability. Algorithms would have no trouble replicating this pattern with a suitable sample of firms. So is CSR the key to profitability? Before you draw a data-based, algorithm-assisted conclusion on such questions, switch on theoretical thinking once again. It prompts a number of somewhat nagging questions: Does the data really show that CSR programmes lead to higher profitability? Or is the correlation between the two variables equally consistent with the hypothesis that it is mainly successful, highly profitable firms that launch CSR programmes because they can afford to? Do the firms in our sample operate in markets where consumers are particularly sensitive to ethical issues? And to what extent are these findings transferable to firms in other sectors? Practically none of the existing machine learning algorithms give us reliable clues to the answers. Here, people have to use their heads and brood over these questions “theoretically”.
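The reverse-causality question can also be illustrated with a toy simulation. This Python sketch uses invented numbers and a hypothetical adoption rule: firms with above-average profit margins adopt CSR with probability 0.8, the others with probability 0.2, and CSR has no effect on profit by construction. A naive comparison still shows CSR firms as more profitable. For contrast, the sketch also assigns CSR by coin flip, as a hypothetical randomized experiment (a technique swapped in here for illustration, not one the article proposes); the profit gap then disappears.

```python
import random
import statistics


def simulate_firms(n_firms, seed, randomize_csr=False):
    """Hypothetical firms: profitability drives CSR adoption, not vice versa.

    Returns a list of (has_csr, profit_margin) pairs. In this toy model
    CSR has no effect on profit at all.
    """
    rng = random.Random(seed)
    firms = []
    for _ in range(n_firms):
        profit = rng.gauss(0.08, 0.04)  # profit margin, e.g. 0.08 = 8 %
        if randomize_csr:
            # Coin flip, as in a randomized experiment.
            has_csr = rng.random() < 0.5
        else:
            # Reverse causality: more profitable firms can afford CSR.
            p_csr = 0.8 if profit > 0.08 else 0.2
            has_csr = rng.random() < p_csr
        firms.append((has_csr, profit))
    return firms


def profit_gap(firms):
    """Mean profit margin of CSR firms minus that of non-CSR firms."""
    with_csr = [p for c, p in firms if c]
    without_csr = [p for c, p in firms if not c]
    return statistics.mean(with_csr) - statistics.mean(without_csr)


if __name__ == "__main__":
    observed = profit_gap(simulate_firms(5000, seed=3))
    randomized = profit_gap(simulate_firms(5000, seed=3, randomize_csr=True))
    print(f"observed gap: {observed:.3f}, randomized gap: {randomized:.3f}")
```

An algorithm trained on the observational data would “learn” that CSR firms are more profitable, which is true in the sample but says nothing about what CSR does to profit.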

Quite a few academics, particularly in the humanities and social sciences, hold the view that, even beyond the examples mentioned, only theory-based causal relationships represent true gains in knowledge and are thus of practical use. They like to call algorithms a spectre. Are you of the same opinion? When does an algorithm-driven analysis make sense to you?

The article was originally published in Zeitschrift für Organisationsentwicklung (ZOE), issue 02, 15 April 2018, pages 35–37, under the title “Schreckgespenst Algorithmen – Das Ende der wissenschaftlichen Theorie? A reflection” (“The spectre of algorithms: the end of scientific theory?”).

About the author

Prof. Dr. Johannes Binswanger, Professor of Economics
